AI 'HALLUCINATIONS' COST NEW YORK LAWYER $5K

"HALLUCINATED CASES MAY LOOK LIKE A REAL CASE BECAUSE THEY INCLUDE FAMILIAR-LOOKING REPORTER INFORMATION, BUT THEIR CITATIONS LEAD TO CASES WITH DIFFERENT NAMES, IN DIFFERENT COURTS AND ON DIFFERENT TOPICS—OR EVEN TO NO CASE AT ALL."

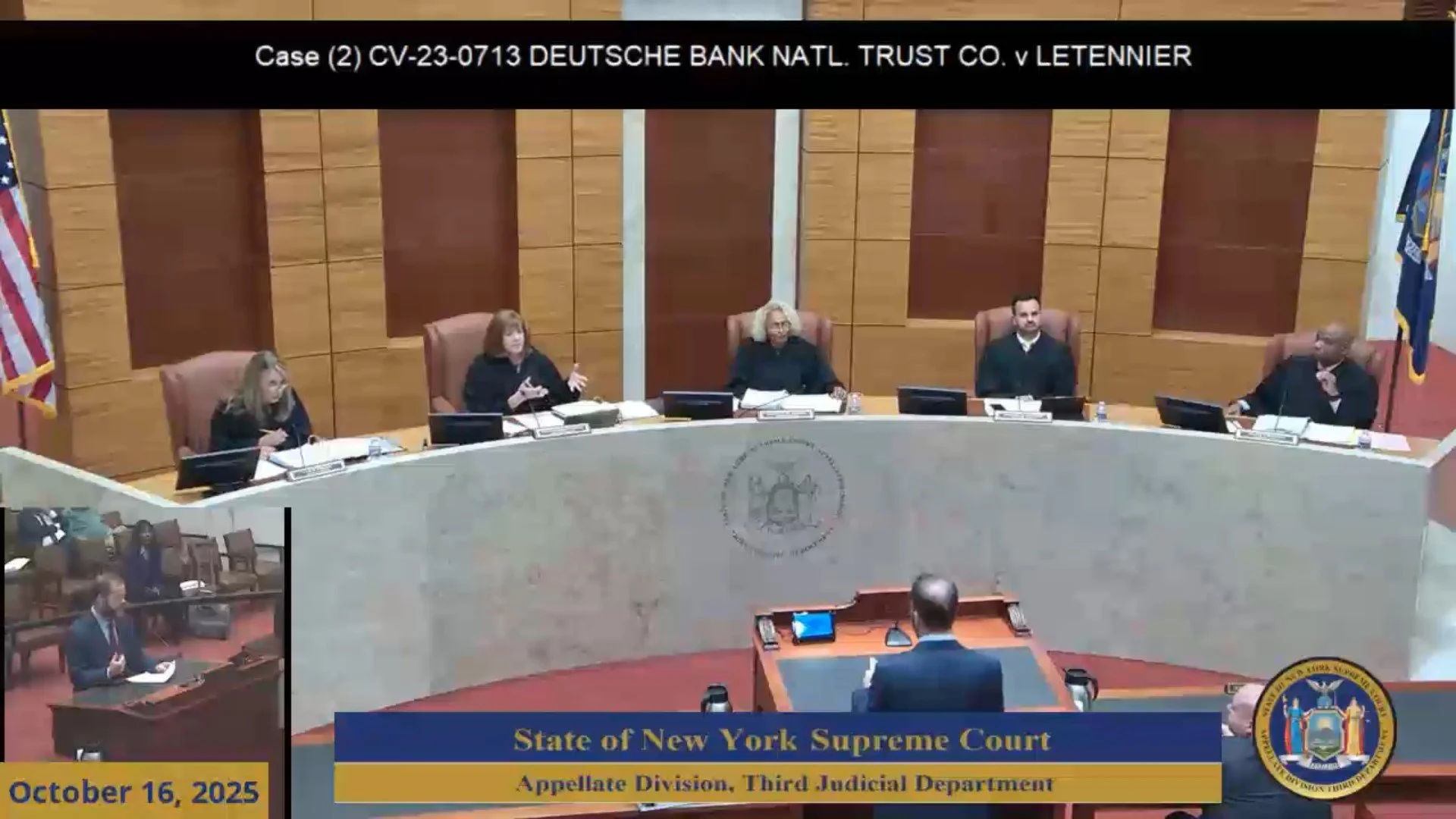

Attorney Joshua A. Douglass is grilled over his misuse of fake, AI-generated legal decisions in briefs he filed. Photo credit: framegrab via the New York State Appellate Division, Third Dep’t.

MALONE, NEW YORK, Jan. 9, 2026

A New York lawyer arguing an appeal submitted legal filings so full of fake, AI-generated "decisions," which he claimed were legal precedent in his client's favor, that the court fined him $5,000.

"In total, defendant's five filings during this appeal include no less than 23 fabricated cases, as well as many other blatant misrepresentations of fact or law from actual cases," the Appellate Division, Third Department in Albany ruled on Thursday.

For those other "blatant misrepresentations," the court fined the lawyer, Joshua A. Douglass of Millerton, another $2,500, bringing his total to $7,500.

The court also fined Jean LeTennier, the client who hired Douglass to file the appeal, $2,500 for pursuing what it called a "baseless" appeal in the first place.

The court said its precedent-setting decision in Deutsche Bank National Trust Company v LeTennier was "the first appellate-level case in New York addressing sanctions for the misuse" of generative AI.


The misuse of fake, AI-generated legal "decisions" is particularly problematic in appeals.

In deciding appeals, appellate courts are bound by prior decisions made by the same court and influenced by decisions from other courts. These decisions are called legal "precedent," and they form the basic building blocks of any appeal or court decision.

The Appellate Division typically sits in five-judge panels. During oral argument on Oct. 16, 2025, the five judges grilled Douglass until he admitted he had used AI to draft the appeal briefs he filed in the case.

That reluctant admission prompted one of the judges, Molly Reynolds-Fitzgerald, to tell him: "But you got to check AI."

When Douglass replied, "I do," Reynolds-Fitzgerald scoffed, "Evidently not too well. Right?"

In the court's written decision, Justice Lisa M. Fisher blasted Douglass for claiming "the nonexistent cases were citation or formatting errors that he would correct in his reply brief."

Instead of doing that, the decision says, Douglass submitted "more fake cases."

"Defendant's subsequent reply brief acknowledged that his 'citation of fictitious cases is a serious error' and that they are 'problematic,'" according to the decision, "but failed to offer any corrections or further explanation as previously stated."

Not only that, but Douglass "proceeded to include more fake cases and false legal propositions in two subsequent letters to this Court that requested judicial notice of a bankruptcy stay."

In fining Douglass, the appeals court was careful to point out that generative AI has a place in the legal world, but only if its output is strictly double-checked. (That adds an extra step, one that makes legal research harder and more time-consuming. So why use it at all?)

"As did the shift from digest books to online legal databases, generative artificial intelligence (hereinafter GenAI) represents a new paradigm for the legal profession, one which is not inherently improper," the court wrote.

At the same time, the court cautioned that "attorneys and litigants must be aware of the dangers that GenAI presents to the legal profession. At the forefront of that peril are AI 'hallucinations.'"

A known hazard in the legal world, AI "hallucinations" occur "when an AI database generates incorrect or misleading sources of information due to a 'variety of factors, including insufficient training data, incorrect assumptions made by the model, or biases in the data used to train the model.'"

"Hallucinated cases may look like a real case because they include familiar-looking reporter information," the court explained, "but their citations lead to cases with different names, in different courts and on different topics—or even to no case at all."

"Even where GenAI provides accurate case citations," the court's decision concluded, "it nonetheless may misrepresent the holdings of the cited cases—often in favor of the user supplying the query."

While honest mistakes can happen with AI, the court found Douglass's excuses for the fake AI-generated decisions he cited in his legal filings "incredible."

"We are most troubled," the court wrote, "that more than half of the fake cases offered by defendant came after he was on notice of such issue." His "reliance on fabricated legal authorities grew more prolific as this appeal proceeded."

"Rather than taking remedial measures or expressing remorse," Douglass "essentially doubled down during oral argument on his reliance of fake legal authorities as not 'germane' to the appeal."

The court said it hoped the fines would deter "future frivolous conduct by defendant and the bar at large."

"To be clear," the court concluded, "attorneys and litigants are not prohibited from using GenAI to assist with the preparation of court submissions."

But as "with the work from a paralegal, intern or another attorney," the court added, "the use of GenAI in no way abrogates an attorney's or litigant's obligation to fact check and cite check every document filed with a court."

Douglass did not respond to an emailed request for comment.

For tips or corrections, The Free Lance can be reached at jasonbnicholas@gmail.com or, if you prefer, thefreelancenews@proton.me.